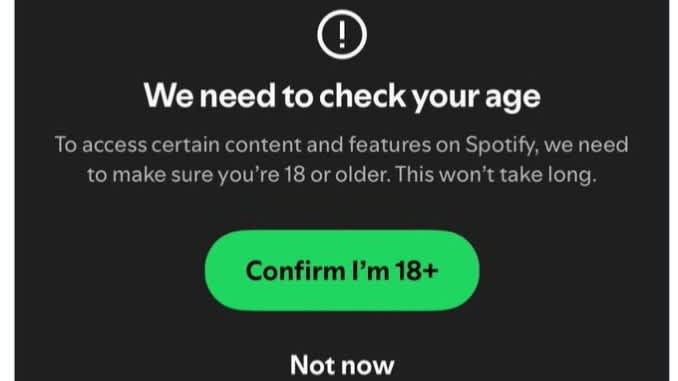

Spotify has warned that users’ accounts may be deleted if they fail new age verification checks.

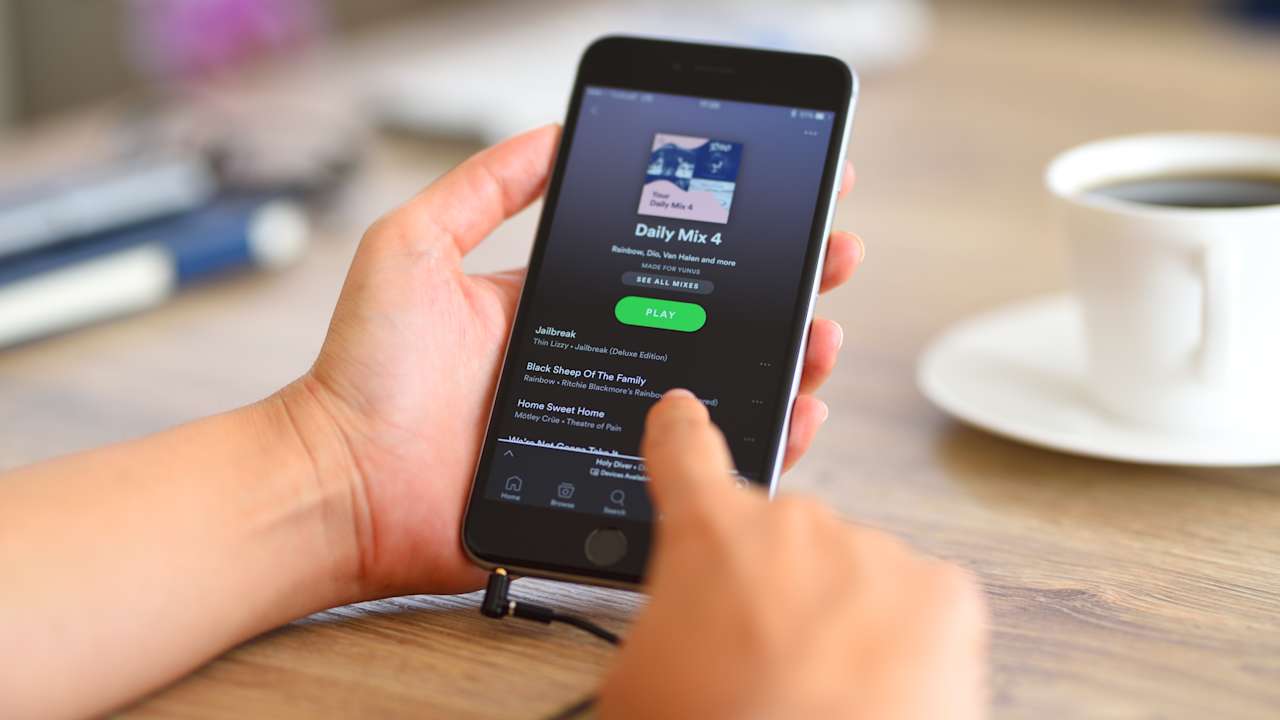

The music streaming app has begun asking users to confirm that they are 18 or over, using facial age estimation and ID verification.

Its website states: “You cannot use Spotify if you don’t meet the minimum age requirements for the market you’re in.

“If you cannot confirm you’re old enough to use Spotify, your account will be deactivated and eventually deleted.”

This has sent many on social media into a flurry, with one user saying, “the fact that you need to verify your age or have your account deleted really shows what’s wrong with the world right now”.

The Open Rights Group, which campaigns for digital freedoms, said: “Bad law makes for bad, incoherent outcomes.”

When will Spotify use an age check?

Spotify has told ITV News that users may be prompted to complete an age check for certain age-restricted content, for example, when trying to watch a music video that has been labelled 18+ by its rightsholder.

However, it is not clear exactly how consistently the measures are being applied.

While some social media users have shared screenshots of their age verification requests on the app, others have said they have not been asked yet.

How does it work?

Spotify will first ask users to verify their age through facial age estimation. Users must take a selfie, which is analysed by face-scanning technology from the verification service Yoti to estimate their age.

If the system determines a user is underage, their account will be deactivated.

However, Spotify will offer a 90-day grace period. During this time, users will receive an email allowing them to reactivate their account, after which they must complete ID verification within seven days.

To complete ID verification on Spotify, tap your profile picture at the top of the app, go to Settings and Privacy, then select Account, and tap Age Check.

If Spotify still can’t confirm a user’s age during the 90-day grace period, or if no action is taken within seven days of reactivation, the account will be permanently deleted.

Spotify has said its service is designed for users aged 13 and over, but it hosts songs and music videos aimed at mature audiences.

Last month, The Times reported that the app had also hosted pornographic podcasts, despite Spotify’s ban on “sexually explicit content”.

Spotify is the latest tech firm to roll out age checks in a bid to stop children from accessing adult material.

The move follows new rules introduced under the UK government’s Online Safety Act, though Spotify told ITV News: “Age assurance has been live as of the last few weeks, and is not implemented solely because of any one law.”

As of last Friday, tech firms must verify the age of users trying to access pornography and other adult content, such as graphic violence. They must also enforce age limits set out in their terms of service.

The new rules have already triggered changes. Porn sites now require age verification, while platforms such as Reddit and X have added age checks on some posts and videos.

Companies that fail to comply risk fines of up to £18 million or 10% of their global turnover, whichever is greater.

Ofcom investigates pornography companies

This comes as Ofcom announced on Thursday that it had launched investigations into 34 pornography websites over concerns they may not be complying with the Online Safety Act’s new age-check rules.

The regulator said it had opened formal investigations into whether companies including 8579 LLC, AVS Group Ltd, Kick Online Entertainment SA and Trendio Ltd had “highly effective” age verification systems in place to stop children accessing pornography across 34 websites.

Ofcom said it prioritised these companies based on the level of risk their services posed and the number of users they attract.

These new cases add to 11 investigations already under way, including probes into 4chan, an online suicide forum, seven file-sharing services, First Time Videos LLC and Itai Tech Ltd.

Wikipedia takes action against government

Meanwhile, Wikipedia has launched legal action against the UK government over the Online Safety Act.

The Wikimedia Foundation (WMF), the non-profit that runs the site, argues that certain regulations under the law, which could classify Wikipedia as a “category one” service, should not apply to it.

Under regulations made under the act, a service falls into category one if it uses a content recommender system and has more than 34 million UK users a month, or if it combines such a system with functions for sharing content and has more than seven million UK users a month.

Wikipedia says that if forced to comply, it may have to either limit the number of users on its site or impose verification on users who don’t want it, a move it says would go against its principles.

In court last week, WMF’s barrister Rupert Paines said the new rules would require platforms to verify users and filter out content from those who aren’t verified.

He warned this could render Wikipedia articles “gibberish” unless all users were verified, and noted that many editors rely on anonymity to avoid online harassment or hacking.

Follow STV News on WhatsApp
