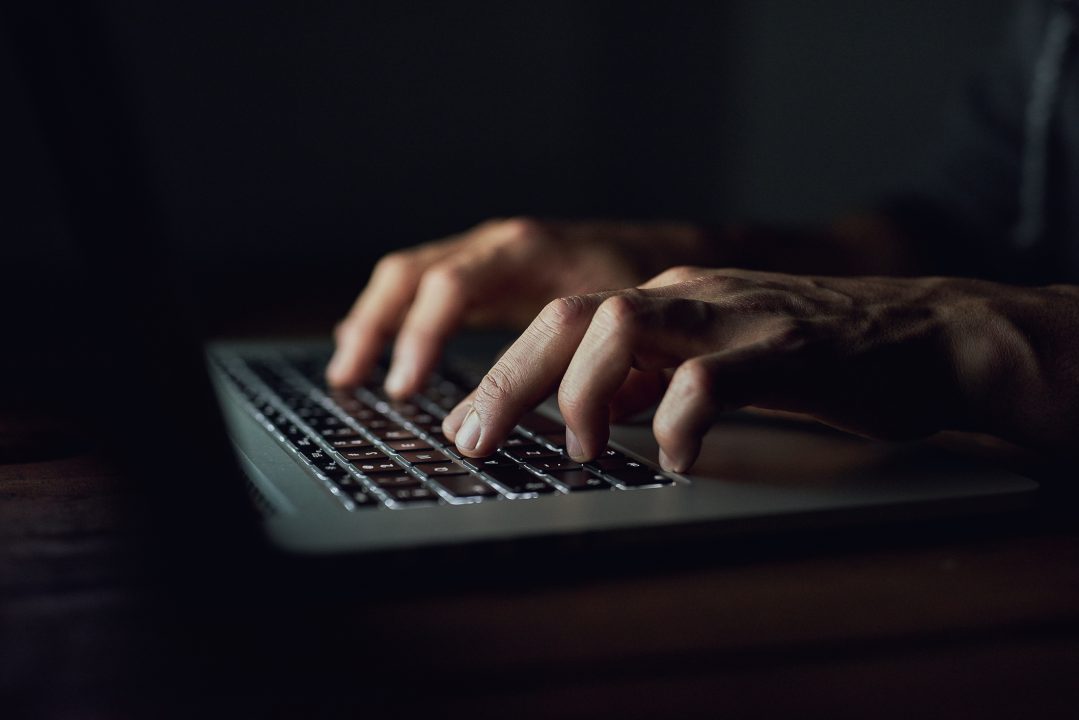

Child protection organisations would be able to test artificial intelligence (AI) models under a proposed new law to prevent the creation of indecent images and videos of children.

In what is being described as one of the first laws of its kind in the world, the change would allow designated organisations to scrutinise AI models to stop them generating or spreading child sexual abuse material.

Under the current UK law – which criminalises the possession and generation of child sexual abuse material – developers cannot carry out safety testing on AI models, meaning images can only be removed after they have been created and shared online.

The changes, due to be tabled on Wednesday as an amendment to the Crime and Policing Bill, would mean safeguards within AI systems could be tested from the start with the aim of limiting the production of child sex abuse images in the first place.

The Government said the changes “mark a major step forward in safeguarding children in the digital age” and that designated bodies could include AI developers and child protection organisations such as the Internet Watch Foundation (IWF).

The new legislation would also enable such organisations to check that AI models have protections against extreme pornography and non-consensual intimate images, the Department for Science, Innovation and Technology said.

The announcement came as the IWF published data showing reports of AI-generated child sexual abuse material have more than doubled in the past year, rising from 199 in the 10 months from January to October 2024 to 426 in the same period in 2025.

According to the data, the material has also become more severe over that time. Items of the most serious category A content – images involving penetrative sexual activity, sexual activity with an animal, or sadism – rose from 2,621 to 3,086 and now account for 56% of all illegal material, compared with 41% last year.

The data showed that girls were the most commonly targeted, appearing in 94% of illegal AI images in 2025.

The Government said it will bring together a group of experts in AI and child safety to ensure testing is “carried out safely and securely”.

The experts will be tasked with helping to design safeguards to protect sensitive data and prevent any risk of illegal content being leaked.

Technology Secretary Liz Kendall said: “We will not allow technological advancement to outpace our ability to keep children safe.

“These new laws will ensure AI systems can be made safe at the source, preventing vulnerabilities that could put children at risk.

“By empowering trusted organisations to scrutinise their AI models, we are ensuring child safety is designed into AI systems, not bolted on as an afterthought.”

Minister for safeguarding Jess Phillips said: “We must make sure children are kept safe online and that our laws keep up with the latest threats.

“This new measure will mean legitimate AI tools cannot be manipulated into creating vile material and more children will be protected from predators as a result.”

Kerry Smith, chief executive of the IWF, said: “AI tools have made it so survivors can be victimised all over again with just a few clicks, giving criminals the ability to make potentially limitless amounts of sophisticated, photorealistic child sexual abuse material.

“Material which further commodifies victims’ suffering, and makes children, particularly girls, less safe on and offline.

“Safety needs to be baked into new technology by design.

“Today’s announcement could be a vital step to make sure AI products are safe before they are released.”

The NSPCC said the new law must make it compulsory for AI models to be tested in this way.

Rani Govender, policy manager for child safety online at the charity, said: “It’s encouraging to see new legislation that pushes the AI industry to take greater responsibility for scrutinising their models and preventing the creation of child sexual abuse material on their platforms.

“But to make a real difference for children, this cannot be optional. Government must ensure that there is a mandatory duty for AI developers to use this provision so that safeguarding against child sexual abuse is an essential part of product design.”
